N or Space for next slide, P for previous slide, F for full screen, M for menu

Lec 6: Code and Data

Boaz Barak (instructor), Brian Sapozhnikov (Head TF), Albert Chalom, Alexis Ross, Charles O’Mara, Daniel Chen, David Li , Jambay Kinley, Jane Ahn, John Shen, Josh Seides, Laura Pierson, Pranay Tankala, Raymond Lin, Theresa Nguyen, Wanqian Yang, William Fu, Hikari Sorensen (Extension TF), Eric Lu (Patel Fellow), Yueqi Sheng (Advanced section), Chi Ning Chou (Advanced section).

Laptops/phones only on last 5 rows please.

Patel Fellow: Eric Lu

Email: ericlu01@g.harvard.edu

Can also Facebook message

Please sign up with BSC as well

Go to sections!! (more capacity further from deadline)

Hikari’s section is recorded

What is CS 121 about?

Duality of code and data Feeding programs to other programs or themselves.

Universality One program can evaluate all others.

Completeness and Emergence/Phase transitions All sufficiently rich models are equivalent.

Uncomputability and intractability The dark side of completeness

Role of randomness in computation

Hardness as a resource derandomization, cryptography

Physical world as a computer (e.g., biological systems, social systems, quantum computers,..)

Course outline

Three Theorems

Theorem 1: For every function \(F:\{0,1\}^n \rightarrow \{0,1\}\) there is an \(O(2^n/n)\) line NAND program computing \(F\). (last week)

Theorem 2: There is an \(O(S^2 \log S)\) line NAND program that computes \(EVAL_{S,n,m}:\{0,1\}^{S+n} \rightarrow \{0,1\}^m\), where for \(P\in \{0,1\}^S\) and \(x\in \{0,1\}^n\), \(EVAL_{S,n,m}(P,x)=P(x)\). (today)

Theorem 3: There exists \(F:\{0,1\}^n \rightarrow \{0,1\}\) that is not in \(Size(2^n/(100n))\). (today)

Thm 3: something can’t be done

Thm 1+2: something can be done: algorithms, programming

Heart of this lecture

An algorithm is a piece of text that can be interpreted as instructions for manipulating pieces of text.

“Trivial observation:” Every NAND program of \(s\) lines can be written using \(O(s \log s)\) alphanumeric + whitespace characters

Corollary 1: Can think of an \(s\)-line program \(P\) as an \(S=O(s \log s)\) bit string.

Corollary 2: Can think of a string \(x\) as a program \(P\).

Corollary 3: Sometimes your computer will think of your strings as programs.

Code as Data I: The counting argument

The counting argument

How many functions are there from \(\{0,1\}^4\) to \(\{0,1\}\)? pollev.com/cs121

a. 16

b. 128

c. 1024

d. 65536

Lemma: \(|\{ f | f:A \rightarrow B \}| = |B|^{|A|}\).

Lower bound via counting

Theorem 3: There exists \(F:\{0,1\}^n \rightarrow \{0,1\}\) that is not in \(Size(2^n/(100n))\).

Proof: We’ll show \(|Size(2^n/(100n))| \lt 2^{2^n}\).

Represent \(s\) line program in \(\leq 10s\log s\) length string.

For \(s=\tfrac{2^n}{100n}\), length \(\leq 10\tfrac{2^n}{100 n}\log(\tfrac{2^n}{100 n})\)

\(\leq \tfrac{2^n}{10 n}n = \tfrac{2^n}{10}\).

\(|Size(2^n/(100n))| \lt 2^{2^n/10}\). QED

Restatement: \(|Size(2^n/(100n))| \leq 2^{0.1 2^n}\).

Fact: Can simulate \(s\)-node neural network by \(100\cdot s^3\) line NAND program.

Exercise: 99.9% of functions mapping a \(100\times 100\) black and white image to a 0/1 value cannot be computed by a neural network of fewer than \(10^{500}\) nodes. vs \(10^{11}\) neurons in brain, \(10^{22}\) neurons on earth, \(10^{82}\) atoms in observable universe

Almost no function on 100 by 100 images is computable by less-than-universe-sized neural network!

Restatement: A random function is uncomputable by less-than-universe-sized neural network with probability \(\geq 0.999\).

Exercise

Reconcile the following two facts:

- For any \(n\geq 1000\), 99.9% of functions \(F:\{0,1\}^n \rightarrow \{0,1\}\) cannot be computed by any biological or electronic computer that fits inside the universe.

- Electronic, human and animal computers continually do amazing computations including building bridges and spaceships, solving highly involved equations, proving theorems, recognizing prey from predator, etc.

Big picture

Questions

- Given algorithm \(A\) and input \(x\), what is \(A(x)\)? - execute an algorithm (Math 21a/b/…)

- Given a high level description of algorithm \(A\), express it in NAND or other precise language - program an algorithm (CS 50/…) + compile an algorithm (CS 153).

- Given algorithm \(A\), what is its maximum running time on all length \(n\) inputs? - analyze an algorithm - CS 124

- Given function \(F:\{0,1\}^n \rightarrow \{0,1\}\), what is the best algorithm to compute \(F\)? - analyze all potential algorithms - CS 121.

Code as Data II: The universal program

Theorem 2

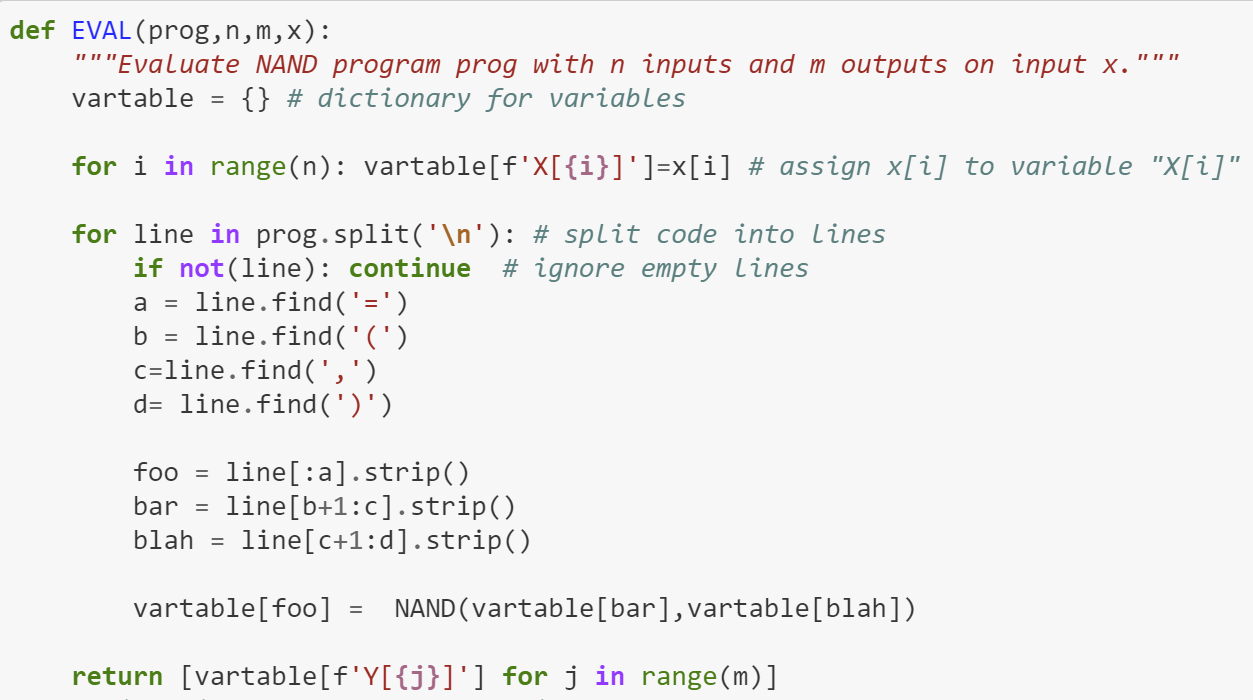

Theorem 2: There is an \(O(S^2 \log S)\) line NAND program that computes \(EVAL_{S,n,m}:\{0,1\}^{S+n} \rightarrow \{0,1\}^m\), where for \(P\in \{0,1\}^S\) and \(x\in \{0,1\}^n\), \(EVAL_{S,n,m}(P,x)=P(x)\). Actually: Only care that it’s \(O(S^c)\) for some \(c\gt0\): i.e., \(poly(s)\).

In words:

Take description of \(s\)-line NAND program \(P(x)\): \(S=O(s \log s)\) bits.

Take input \(x \in \{0,1\}^n\)

- Output \(P(x)\)

Finite function: Obviously computable in \(2^{O(s \log s)}\) lines.

Content of theorem: Can be done in much fewer lines: \(poly(s)\)

Exercise

Let \(EQUAL_n:\{0,1\}^{2n} \rightarrow \{0,1\}\) defined as

Prove that \(EQUAL_n \in SIZE(O(n))\). (i.e., there is \(c\) such that for suff. large \(n\), \(EQUAL_n \in SIZE(c\cdot n))\)

General NAND techniques

Data structures: Use \(LOOKUP\), \(EQUAL\) to implement arrays, lists, etc.. (good enough if you don’t care about the difference between \(O(n)\) and \(O(n^2)\))

\(UPDATE(T,i,v) = T'\) such that \(T'_j = \begin{cases}T_i & i\neq j \\ v & i=j \end{cases}\)

Can “hardwire” constants. E.g. for \(a\in \{0,1\}^n\) compute \(EQUAL_a:\{0,1\}^n \rightarrow \{0,1\}\) that outputs \(1\). Use zero and one.

Replace finite loops or recursion with copy-paste.

Rule of thumb: If your computer can do it in \(s\) cycles, there is a \(\approx s^2\) line NAND program to do it too.

Proof of Theorem 2

Bottom Line

- Can represent a program as a string

- Exists universal program that takes programs as input and can evaluate them.

- For some functions, best program requires few lines (e.g., \(O(n)\), \(poly(n)\)), some functions are inherently intractable (e.g., no program with \(\ll 2^n\) lines)

Next up

Intuitive notion of algorithm: describe in one page an arbitrary length computation.

NAND programs: \(s\) lines \(=\) \(s\) steps. Can access at most \(3s\) locations of memory.

Introduce NAND++: NAND with loops and arrays.

NAND= straightline programs = Boolean circuits

NAND++ = general programs = Turing machines